The Keyword Linking Engine: custom vocabularies and multiple languages

WARNING: This engine is deprecated. Users are encouraged to use the EntityhubLinkingEngine instead.

The KeywordLinkingEngine is intended to extract occurrences of Entities that are part of a Controlled Vocabulary from content passed to the Stanbol Enhancer. To do this, words appearing within the text are compared with the labels of entities. The Stanbol Entityhub is used to look up Entities based on their labels.

This documentation first describes the configuration options of this engine; that part is mainly intended for users of this engine. The remaining part of this document is more technical and is intended for developers who want to extend this engine or want to know the technical details.

Configuration

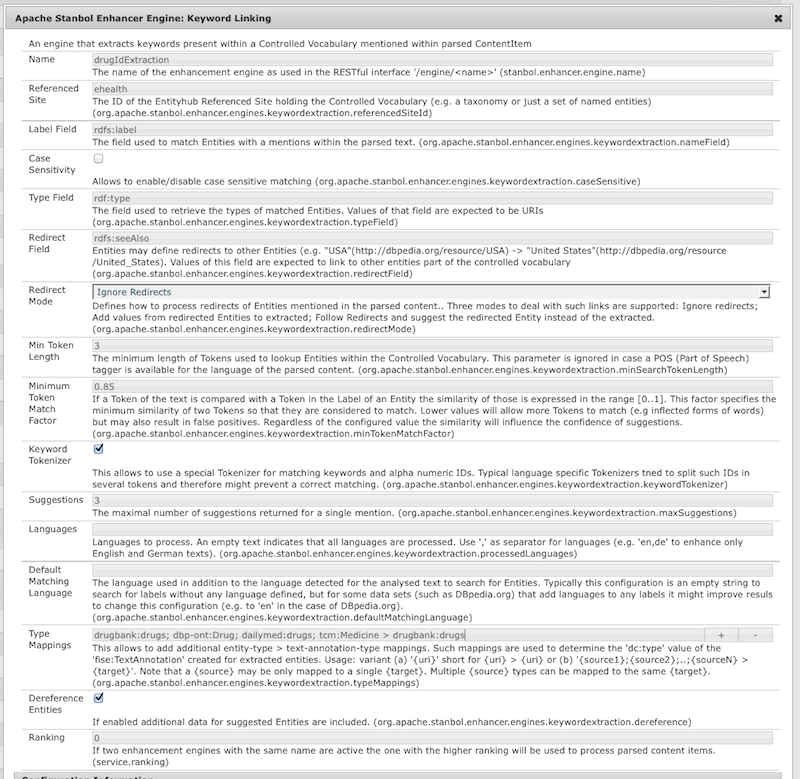

The KeywordLinkingEngine provides many configuration options. This section describes the different options based on the configuration dialog as shown by the Apache Felix Webconsole.

The example in the screenshot shows a configuration that is used to extract drugs based on various IDs (e.g. the ATC code and the InChI key) that are all stored as values of the skos:notation property. This example highlights newer features such as case-sensitive matching, the keyword tokenizer and customized type mappings. Similar configurations would also be needed to extract product IDs, ISBN numbers or, more generally, concepts of a thesaurus based on their notation.

Configuration Parameter

- Name (stanbol.enhancer.engine.name): The name of the Enhancement Engine. This name is used to refer to an EnhancementEngine in EnhancementChains.

- Referenced Site (org.apache.stanbol.enhancer.engines.keywordextraction.referencedSiteId): The name of the ReferencedSite of the Stanbol Entityhub that holds the controlled vocabulary to be used for extracting Entities. "entityhub" or "local" can be used to extract Entities managed directly by the Entityhub.

- Label Field (org.apache.stanbol.enhancer.engines.keywordextraction.nameField): The name of the property used to look up Entities. Only a single field is supported, for performance reasons. Users who want to use values of several fields should collect such values into a single field through an appropriate configuration of the mappings.txt used during indexing. The corresponding usage scenario provides more information on this.

- Case Sensitivity (org.apache.stanbol.enhancer.engines.keywordextraction.caseSensitive): Activates/deactivates case-sensitive matching. It is important to understand that even with case sensitivity activated, an Entity with a label such as "Anaconda" will be suggested for the mention "anaconda" in the text. The main difference is the confidence value of such a suggestion, because with case sensitivity activated the starting letters "A" and "a" are NOT considered to match. See the technical part of this document for details about the matching process. Case sensitivity is deactivated by default. Activating it is recommended if the controlled vocabulary contains abbreviations similar to commonly used words, e.g. CAN for Canada.

- Type Field (org.apache.stanbol.enhancer.engines.keywordextraction.typeField): Values of this field are used as values of the "fise:entity-types" property of created "fise:EntityAnnotation"s. The default is "rdf:type".

- Redirect Field (org.apache.stanbol.enhancer.engines.keywordextraction.redirectField) and Redirect Mode (org.apache.stanbol.enhancer.engines.keywordextraction.redirectMode): Redirects tell the KeywordLinkingEngine to follow a specific property in the knowledge base for matched entities. This feature allows, for example, following redirects from "USA" to "United States" as defined in Wikipedia. See "Processing of Entity Suggestions" for details. Possible values for the Redirect Mode are "IGNORE" (deactivates this feature), "ADD_VALUES" (uses the label and type information of redirected entities but keeps the URI of the extracted entity) and "FOLLOW" (follows the redirect).

- Min Token Length (org.apache.stanbol.enhancer.engines.keywordextraction.minSearchTokenLength): While the KeywordLinkingEngine preferably uses POS (part-of-speech) taggers to determine whether a word should be matched with the controlled vocabulary, the minimum token length provides a fallback if (a) no POS tagger is available for the language of the parsed text or (b) the confidence of the POS tagger is lower than the threshold.

- Minimum Token Match Factor (org.apache.stanbol.enhancer.engines.keywordextraction.minTokenMatchFactor): When a Token of the text is compared with a Token of an Entity label, their similarity is expressed in the range [0..1]. The minimum token match factor specifies the minimum similarity two Tokens need in order to be considered a match; lower similarity scores are not considered a match. This parameter is important because it allows, for example, inflected forms of words to match. However, it may also result in false positives for similar words. Users should note that the similarity score is also used to calculate the confidence: similarity scores < 1 that still reach the configured minimum token match factor reduce the confidence of suggested Entities.

- Keyword Tokenizer (org.apache.stanbol.enhancer.engines.keywordextraction.keywordTokenizer): Enables a special Tokenizer for matching keywords and alphanumeric IDs. Typical language-specific Tokenizers tend to split such IDs into several tokens and might therefore prevent a correct match. This Tokenizer should only be activated if the KeywordLinkingEngine is configured to match against IDs like ISBN numbers, product IDs, etc. It should not be used to match against natural language labels.

- Suggestions (org.apache.stanbol.enhancer.engines.keywordextraction.maxSuggestions): The maximum number of suggested Entities.

- Languages (org.apache.stanbol.enhancer.engines.keywordextraction.processedLanguages) and Default Matching Language (org.apache.stanbol.enhancer.engines.keywordextraction.defaultMatchingLanguage): The first specifies the languages that should be processed by this engine. This is useful, for example, if the controlled vocabulary only contains labels in a specific language but does not formally specify this information (by setting the "xml:lang" property for labels). The default matching language can be used to work around the exact opposite case. For example, in DBpedia labels get the language of the dataset they are extracted from (e.g. all data extracted from en.wikipedia.org gets "xml:lang=en"). The default matching language tells the KeywordLinkingEngine to use labels of that language for matching regardless of the language of the parsed content. In the case of DBpedia this allows, for example, persons mentioned in an Italian text to be matched with the English labels extracted from en.wikipedia.org. Details about the natural language processing features used by this engine are provided in the section "Multiple Language Support".

- Type Mappings (org.apache.stanbol.enhancer.engines.keywordextraction.typeMappings): The FISE enhancement structure (as used by the Stanbol Enhancer) distinguishes TextAnnotations and EntityAnnotations. The KeywordLinkingEngine needs to create both types of annotations: TextAnnotations selecting the words that match some Entities in the Controlled Vocabulary, and EntityAnnotations that represent an Entity suggested for a TextAnnotation. The Type Mappings are used to determine the "dc:type" of the TextAnnotation based on the types of the suggested Entity. The default configuration comes with mappings for Persons, Organizations, Places and Concepts, but this field allows additional mappings to be defined. For details see the sections "Type Mappings Syntax" and "Processing of Entity Suggestions".

- Dereference Entities (org.apache.stanbol.enhancer.engines.keywordextraction.dereference): If enabled this engine adds additional information about the suggested Entities to the Metadata of the enhanced content item.

- Ranking (service.ranking): This property is used if two engines use the same name. In such cases the one with the higher ranking is used to enhance content items. Typically users will not need to change this.

Additionally the following properties can be configured via a configuration file:

- Minimum Found Tokens (org.apache.stanbol.enhancer.engines.keywordextraction.minFoundTokens): Tells the KeywordLinkingEngine how to deal with Entities whose labels do not exactly match the words in the text. A typical example is "George W. Bush" -> "George Walker Bush". This parameter sets the minimum number of tokens that need to match. The default value is '2'. Note that this does not apply to exact matches. Setting it to a high value forces a mode that only considers entities where all tokens of the label match the mention in the text.

- Minimum Pos Tag Probability (org.apache.stanbol.enhancer.engines.keywordextraction.minPosTagProbability): The minimum probability of a POS (part-of-speech) tag. Tags with a lower probability will be ignored. In such cases the configured value for the Min Token Length will apply. The value MUST BE in the range [0..1]

Type Mappings Syntax

The Type Mappings are used to determine the "dc:type" of the TextAnnotation based on the types of the suggested Entity. The field "Type Mappings" (property: org.apache.stanbol.enhancer.engines.keywordextraction.typeMappings) can be used to customize such mappings.

This field uses the following syntax:

    {uri}
    {source} > {target}
    {source1}; {source2}; ... {sourceN} > {target}

The first variant is shorthand for {uri} > {uri} and therefore specifies that {uri} should be used as 'dc:type' for TextAnnotations if the matched entity is of type {uri}. The second variant maps a {source} URI to a {target}. The third variant maps multiple URIs to the same target in a single configuration line.

Both 'ns:localName' and fully qualified URIs are supported. For supported namespaces see the NamespaceEnum. Information about accepted (INFO) and ignored (WARN) type mappings is available in the logs.
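To make the three syntax variants concrete, here is a minimal sketch of a parser for one configuration line. It is not the engine's own parser: the class `TypeMappingParser` and its methods are hypothetical, prefixed names are kept as-is (no NamespaceEnum expansion), and letting later lines override earlier ones is an assumption.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of parsing the Type Mappings syntax; not Stanbol code.
final class TypeMappingParser {

    private TypeMappingParser() {}

    /** Parses one configuration line into a source -> target map. */
    static Map<String, String> parseLine(String line) {
        Map<String, String> mappings = new LinkedHashMap<>();
        String trimmed = line.trim();
        if (trimmed.isEmpty()) {
            return mappings;
        }
        int arrow = trimmed.indexOf('>');
        if (arrow < 0) {
            // Variant 1: "{uri}" is shorthand for "{uri} > {uri}"
            mappings.put(trimmed, trimmed);
            return mappings;
        }
        String target = trimmed.substring(arrow + 1).trim();
        if (target.isEmpty()) {
            throw new IllegalArgumentException("Missing target in: " + line);
        }
        // Variants 2 and 3: one or more ';'-separated sources mapped to one target
        for (String source : trimmed.substring(0, arrow).split(";")) {
            String s = source.trim();
            if (!s.isEmpty()) {
                mappings.put(s, target);
            }
        }
        return mappings;
    }

    /** Parses several lines; a later line overrides an earlier one for the same source (assumption). */
    static Map<String, String> parse(List<String> lines) {
        Map<String, String> all = new LinkedHashMap<>();
        for (String line : lines) {
            all.putAll(parseLine(line));
        }
        return all;
    }
}
```

For example, the predefined line `dbp-ont:Person; foaf:Person; schema:Person > dbp-ont:Person` yields three entries that all point to `dbp-ont:Person`.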

Some examples of additional mappings for the e-health domain:

    drugbank:drugs; dbp-ont:Drug; dailymed:drugs; sider:drugs; tcm:Medicine > drugbank:drugs
    diseasome:diseases; linkedct:condition; tcm:Disease > diseasome:diseases
    sider:side_effects
    dailymed:ingredients
    dailymed:organization > dbp-ont:Organisation

The first two lines map some well-known classes that represent drugs and diseases to 'drugbank:drugs' and 'diseasome:diseases'. The third and fourth lines define 1:1 mappings for side effects and ingredients, and the last line adds 'dailymed:organization' as an additional mapping to the DBpedia Ontology class Organisation.

The following mappings are predefined by the KeywordLinkingEngine.

    dbp-ont:Person; foaf:Person; schema:Person > dbp-ont:Person
    dbp-ont:Organisation; dbp-ont:Newspaper; schema:Organization > dbp-ont:Organisation
    dbp-ont:Place; schema:Place; gml:_Feature > dbp-ont:Place
    skos:Concept

Multiple Language Support

The KeywordLinkingEngine supports the extraction of keywords in multiple languages. However, the performance and, to some extent, the quality of the enhancements depend on how well a language is supported by the NLP framework used (currently OpenNLP). The following list provides a short overview of the different language-specific components/configurations:

- Language detection: The KeywordLinkingEngine depends on the correct detection of the language by the LanguageIdentificationEngine. If no language is detected or this information is missing then "English" is assumed as default.

- Multi-lingual labels of the controlled vocabulary: Entities are matched based on labels in the current language and labels without any defined language. For example, English labels will not be matched against German texts. Therefore it is important to have a controlled vocabulary that includes labels in the language of the texts you want to enhance.

- Natural Language Processing support: The KeywordLinkingEngine is able to use Sentence Detectors, POS (Part of Speech) taggers and Chunkers. If such components are available for a language then they are used to optimize the enhancement process.

- Sentence detector: If a sentence detector is present, the memory footprint of the engine improves, because Tokens, POS tags and Chunks are only kept for the currently active sentence. If no sentence detector is available, the entire content is treated as a single sentence.

- Tokenizer: A (word) tokenizer is required for the enhancement process. If no specific tokenizer is available for a given language, the OpenNLP SimpleTokenizer is used as default. The Keyword Tokenizer parameter can be used to force the use of a special Tokenizer that is optimized for matching keywords. This Tokenizer does not split alphanumeric IDs, so such tokens can be matched correctly. If this option is enabled, any language-specific Tokenizer is ignored in favor of the KeywordTokenizer.

- POS tagger: POS (Part-of-Speech) taggers annotate tokens with their word class. Because the KeywordLinkingEngine is only interested in Nouns, Foreign Words and Numbers, the presence of such a tagger allows it to skip many tokens and improves performance. However, POS taggers use different tag sets for different languages. Therefore it is not enough that a POS tagger is available for a language; there MUST also be a configuration of the POS tags representing Nouns.

- Chunker: There are two types of Chunkers: first, the Chunkers provided by OpenNLP (based on statistical models), and second, a POS-tag-based Chunker provided by the OpenNLP bundle of Stanbol. Currently the availability of a Chunker has little influence on either the performance or the quality of the Enhancements.

Keyword extraction and linking workflow

Basically the text is parsed from the beginning to the end and words are looked up in the configured controlled vocabulary.

Text Processing

The AnalysedContent interface is used to access natural language text that was already processed by an NLP framework. Currently there is only a single implementation, based on the commons.opennlp TextAnalyzer utility. In general this part is still very focused on OpenNLP; making it usable with other NLP frameworks would probably require some refactoring.

The current state of the processing is represented by the ProcessingState. Depending on the capabilities of the NLP framework for the current language, it provides the following information:

- AnalysedSentence: If a sentence detector is present, this represents the current sentence of the text. If not, the whole text is represented as a single sentence. The AnalysedSentence also provides access to POS tags and Chunks (if available).

- Chunk: If a chunker is present, then this represents the current chunk. Otherwise this will be null.

- Token: The currently processed word, which is part of the current Chunk and AnalysedSentence.

- TokenIndex: The index of the currently active token relative to the AnalysedSentence.

Processing is done based on Tokens (words). The ProcessingState provides means to navigate to the next token. If Chunks are present, tokens outside of chunks are ignored. Only 'processable' tokens are used to look up entities (see the next section for details). Whether a Token is processable is determined as follows:

- Only Tokens within a Chunk are considered. If no Chunks are available, all Tokens are considered.

- If POS tags are available AND POS tags considered as NOUNS are configured (see PosTagsCollectionEnum), then POS tags are used to decide whether a Token is processable:

  - The default minimum POS tag probability (minPosTagProb) is 0.667.

  - Tokens with a POS tag representing a NOUN and a probability >= minPosTagProb are marked as processable.

  - Tokens with a POS tag NOT representing a NOUN and a probability >= minPosTagProb/2 are marked as NOT processable.

- If POS tags are NOT available or the NOUN POS tags configuration is missing, the minimum token length (org.apache.stanbol.enhancer.engines.keywordextraction.minSearchTokenLength) is used as a fallback. This means that all Tokens equal to or longer than this value are marked as processable.

This algorithm was introduced by STANBOL-658.
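The decision rules above can be sketched as a single method. This is a minimal illustration under assumptions, not the engine's implementation: `ProcessableTokenCheck` and its parameters are hypothetical, `insideChunk` should be passed as `true` when no chunker is available, and POS tags below both thresholds fall back to the minimum token length, as described for the Minimum Pos Tag Probability setting.

```java
import java.util.Set;

// Hypothetical sketch of the "is this Token processable?" decision; not Stanbol code.
final class ProcessableTokenCheck {

    private ProcessableTokenCheck() {}

    /**
     * @param token                the token text
     * @param insideChunk          whether the token lies inside a chunk (true if no chunker is available)
     * @param posTag               the POS tag, or null if no POS tagger is available
     * @param posProbability       the probability of that POS tag
     * @param nounTags             POS tags representing nouns, or null if not configured for the language
     * @param minPosTagProb        minimum POS tag probability (e.g. 0.667)
     * @param minSearchTokenLength minimum token length used as fallback
     */
    static boolean isProcessable(String token, boolean insideChunk, String posTag,
                                 double posProbability, Set<String> nounTags,
                                 double minPosTagProb, int minSearchTokenLength) {
        if (!insideChunk) {
            return false; // tokens outside of chunks are ignored
        }
        if (posTag != null && nounTags != null) {
            boolean noun = nounTags.contains(posTag);
            if (noun && posProbability >= minPosTagProb) {
                return true; // confident noun tag
            }
            if (!noun && posProbability >= minPosTagProb / 2) {
                return false; // reasonably confident non-noun tag
            }
            // POS tag too uncertain: fall through to the token length fallback
        }
        return token.length() >= minSearchTokenLength;
    }
}
```

Note the asymmetry: a non-noun tag only needs half the probability to exclude a token, so uncertain tokens are more likely to be skipped than looked up.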

Entity Lookup

An "OR" query with [1..MAX_SEARCH_TOKENS] processable tokens is used to look up entities via the EntitySearcher interface. If the actual implementation cuts off results, it must ensure that Entities that match both tokens are ranked first. Currently there are two implementations of this interface: (1) for the Entityhub (EntityhubSearcher) and (2) for ReferencedSites (ReferencedSiteSearcher). There is also an implementation that holds entities in memory; however, it is currently only used for unit tests.

Queries use the configured EntityLinkerConfig.getNameField(), and the language of labels is restricted to the current language or to labels that do not define any language.

Only "processable" tokens are used to look up entities. Whether a token is processable is determined as follows:

- If POS tags are available the "Boolean processPOS(String posTag)" method of the AnalysedContent is used to check if a Token needs to be processed.

- If this method returns NULL or no POS tags are available, then all Tokens longer than EntityLinkerConfig.getMinSearchTokenLength() (default=3) are considered as processable.

Typically the next MAX_SEARCH_TOKENS processable tokens are used for a lookup. However, the search for processable tokens never leaves the current Chunk/Sentence.

Matching of found Entities
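Selecting the tokens for one lookup can be sketched as follows. This is a minimal sketch under assumptions: `SearchTokenCollector` is hypothetical, the current Chunk/Sentence is modelled as the index range `[current, end)`, and `toOrQuery` only illustrates the OR semantics; it is not the query syntax of any EntitySearcher implementation.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of collecting search tokens for one lookup; not Stanbol code.
final class SearchTokenCollector {

    private SearchTokenCollector() {}

    /**
     * Collects up to maxSearchTokens processable tokens, starting at index current
     * and never going past end (the end of the current Chunk/Sentence).
     */
    static List<String> collect(List<String> tokens, boolean[] processable,
                                int current, int end, int maxSearchTokens) {
        List<String> searchTokens = new ArrayList<>();
        for (int i = current; i < end && searchTokens.size() < maxSearchTokens; i++) {
            if (processable[i]) {
                searchTokens.add(tokens.get(i));
            }
        }
        return searchTokens;
    }

    /** Joins the tokens into a textual OR expression, for illustration only. */
    static String toOrQuery(List<String> searchTokens) {
        return String.join(" OR ", searchTokens);
    }
}
```

With the tokens "the Barack Obama visited Berlin", where only "Barack", "Obama" and "Berlin" are processable, and a limit of two, the lookup would use "Barack" and "Obama".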

All labels (values of the EntityLinkerConfig.getNameField() field) in the language of the content or without any defined language are candidates for matches.

For each label that fulfills the above criteria the following steps are processed. The best result is used as the result of the whole matching process:

- Tokens (of the text) following the current position are searched within the label. This also includes non-processable Tokens.

- Processable Tokens MUST match Tokens in the Label. At most EntityLinkerConfig.getMaxNotFound() non-processable Tokens may fail to match.

- Token order is important. Tokens in the Entity Label are allowed to be skipped (e.g. the text 'Barack Obama' will match the label 'Barack Hussein Obama' because 'Hussein' may be skipped). The other way around there would be no match, because processable Tokens in the Text are not allowed to be skipped.

- If the first Token of the Label does not match, preceding Tokens of the Text are also matched against the Label. This ensures that Entities that use adjectives in their labels (e.g. "great improvement", "Gute Deutschkenntnisse") are matched. In addition, this helps to match named entities (e.g. person names), as the first token of such mentions is sometimes erroneously classified as an adjective by POS taggers.

- Tokens that appear in the wrong order (e.g. the text 'Obama, Barack' with the label 'Barack Obama') are matched with a factor of 0.7. Currently only exact token matches are considered in this case.
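The in-order rules of the list above (label tokens may be skipped, processable text tokens may not, and only a limited number of non-processable text tokens may fail to match) can be sketched as follows. This is a simplified illustration under assumptions: `OrderedLabelMatcher` is hypothetical, tokens are compared with exact equality instead of the token similarity, and the 0.7 factor for wrong order as well as the look-back to preceding tokens are left out.

```java
import java.util.List;

// Hypothetical, simplified sketch of in-order label matching; not Stanbol code.
final class OrderedLabelMatcher {

    /** Number of matched text tokens and number of text tokens spanned by the match. */
    record Match(int matched, int span) {}

    private OrderedLabelMatcher() {}

    static Match match(List<String> text, boolean[] processable,
                       List<String> label, int maxNotFound) {
        int matched = 0;
        int span = 0;
        int notFound = 0;
        int labelPos = 0; // first label index that may still be matched
        for (int i = 0; i < text.size() && labelPos < label.size(); i++) {
            // Label tokens may be skipped: search forward from labelPos
            int found = label.subList(labelPos, label.size()).indexOf(text.get(i));
            if (found >= 0) {
                matched++;
                span = i + 1;            // the match now covers text tokens [0, i]
                labelPos += found + 1;   // keep the label order
            } else if (processable[i]) {
                break;                   // processable text tokens must not be skipped
            } else if (++notFound > maxNotFound) {
                break;                   // too many non-matching non-processable tokens
            }
        }
        return new Match(matched, span);
    }
}
```

For the text "Barack Obama" and the label "Barack Hussein Obama" this yields 2 matched tokens spanning 2 text tokens, while the reverse pairing stops after "Barack".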

Whether two tokens match is calculated by dividing the length of the longest matching part, counted from the beginning of the Tokens, by the maximum length of the two tokens. For example, 'German' matches 'Germany' with 6/7 ≈ 0.86. The result of this comparison is the token similarity. If this similarity is greater than or equal to the configured minimum token match factor (org.apache.stanbol.enhancer.engines.keywordextraction.minTokenMatchFactor), then those tokens are considered to match. The token similarity is also used for calculating the confidence.
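The token similarity described in the paragraph above (longest common prefix divided by the length of the longer token) fits in a few lines. A minimal sketch: `TokenSimilarity` is a hypothetical name, and any case handling (see Case Sensitivity) is assumed to happen before these methods are called.

```java
// Hypothetical sketch of the prefix-based token similarity; not Stanbol code.
final class TokenSimilarity {

    private TokenSimilarity() {}

    /** Length of the common prefix divided by the length of the longer token, in [0..1]. */
    static float similarity(String a, String b) {
        int maxLength = Math.max(a.length(), b.length());
        if (maxLength == 0) {
            return 0f; // two empty tokens are not treated as a match
        }
        int minLength = Math.min(a.length(), b.length());
        int prefix = 0;
        while (prefix < minLength && a.charAt(prefix) == b.charAt(prefix)) {
            prefix++;
        }
        return (float) prefix / maxLength;
    }

    /** Tokens match if their similarity reaches the minimum token match factor. */
    static boolean matches(String a, String b, float minTokenMatchFactor) {
        return similarity(a, b) >= minTokenMatchFactor;
    }
}
```

Because only the common prefix counts, inflected endings ("German"/"Germany") score high, while a difference in the first letter drives the score to zero.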

Entities are suggested if:

- a label matches the current position in the text exactly, i.e. all tokens of the Label match Tokens of the text. Note that tokens are considered to match if their similarity is greater than or equal to the minimum token match factor.

- for partial matches, at least EntityLinkerConfig.getMinFoundTokens() (default=2) processable tokens match. Non-processable tokens are not counted. This ensures that "Rupert Murdoch" is not suggested for "Rupert", while "Barack Hussein Obama" is suggested for "Barack Obama".

The described matching process is currently implemented directly in the EntityLinker. To support different matching strategies it would need to be externalized into its own "EntityLabelMatcher" interface.
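The two suggestion criteria above reduce to a simple predicate. A minimal sketch: `SuggestionFilter` and its parameters are hypothetical, and the counts are assumed to come from a preceding matching step.

```java
// Hypothetical sketch of the suggestion criteria; not Stanbol code.
final class SuggestionFilter {

    private SuggestionFilter() {}

    /**
     * @param matchedLabelTokens       label tokens matched by the text
     * @param labelTokens              total number of label tokens
     * @param matchedProcessableTokens processable text tokens that matched
     * @param minFoundTokens           EntityLinkerConfig.getMinFoundTokens() (default 2)
     */
    static boolean isSuggested(int matchedLabelTokens, int labelTokens,
                               int matchedProcessableTokens, int minFoundTokens) {
        boolean exact = matchedLabelTokens == labelTokens;               // every label token matched
        boolean partial = matchedProcessableTokens >= minFoundTokens;    // enough processable tokens
        return exact || partial;
    }
}
```

"Rupert" against "Rupert Murdoch" (1 of 2 label tokens, 1 processable match) is rejected, while "Barack Obama" against "Barack Hussein Obama" (2 processable matches) is accepted.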

Processing of Entity Suggestions

If there are one or more Entity suggestions for the current position within the text, a LinkedEntity instance is created.

LinkedEntity is an object model representing the Stanbol Enhancement Structure. After the processing of the parsed content is completed, the LinkedEntities are "serialized" as RDF triples to the metadata of the ContentItem.

TextAnnotations as defined in the Stanbol Enhancement Structure use the dc:type property to provide the general type of the extracted Entity. However, suggested Entities might have very specific types. Therefore the EntityLinkerConfig provides the possibility to map the specific types of the Entity to types used for the dc:type property of TextAnnotations. EntityLinkerConfig.DEFAULT_ENTITY_TYPE_MAPPINGS contains some predefined mappings. Note that the field used to retrieve the types of a suggested Entity can be configured via the EntityLinkerConfig. The default value for the type field is "rdf:type".

In some cases suggested entities redirect to others. In Wikipedia/DBpedia this is often used to link from acronyms like IMF to the real entity International Monetary Fund. Some thesauri also define labels as separate Entities with their own URI, and users might want to use the URI of the Concept rather than that of the label. To support such use cases the KeywordLinkingEngine supports redirects. Users configure first the redirect mode (IGNORE, ADD_VALUES, FOLLOW; see Redirect Mode in the configuration section) and second the field used to look up redirects (default=rdfs:seeAlso). If the redirect mode is not IGNORE, the Entities referenced by the configured redirect field are retrieved for each suggestion. In ADD_VALUES ("copy values") mode the values of the name and type fields are copied. In FOLLOW mode the suggested entity is replaced with the first redirected entity.
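The effect of the three redirect modes on a single suggestion can be sketched as follows. This is a minimal illustration under assumptions: `RedirectProcessor` and its `Entity` record are hypothetical stand-ins (an entity is reduced to its URI plus the values of the name and type fields), and retrieving the redirected entities through the redirect field is assumed to have happened already.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of redirect handling; not Stanbol code.
final class RedirectProcessor {

    /** The three modes named in the configuration section. */
    enum RedirectMode { IGNORE, ADD_VALUES, FOLLOW }

    /** Minimal stand-in for an entity: URI plus name and type field values. */
    record Entity(String uri, Set<String> names, Set<String> types) {}

    private RedirectProcessor() {}

    static Entity process(Entity suggested, List<Entity> redirects, RedirectMode mode) {
        if (mode == RedirectMode.IGNORE || redirects.isEmpty()) {
            return suggested; // nothing to do
        }
        if (mode == RedirectMode.FOLLOW) {
            return redirects.get(0); // replace with the first redirected entity
        }
        // ADD_VALUES: keep the URI, copy name and type values of redirected entities
        Set<String> names = new LinkedHashSet<>(suggested.names());
        Set<String> types = new LinkedHashSet<>(suggested.types());
        for (Entity redirect : redirects) {
            names.addAll(redirect.names());
            types.addAll(redirect.types());
        }
        return new Entity(suggested.uri(), names, types);
    }
}
```

ADD_VALUES is useful when the extracted URI must be kept but its type information lives on the redirect target, as with an acronym entity that has no types of its own.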

Confidence for Suggestions

The confidence for suggestions is calculated based on the following algorithm:

Input Parameters

- max_matched: the maximum number of matched tokens over all suggestions, e.g. for the text "Barack Obama" -> 2

- matched: number of tokens that match for the current suggestion e.g. "Barack Hussein Obama" -> 2

- span: number of tokens selected by the current suggestion e.g. "Barack Hussein Obama" -> 2

- label_tokens: number of tokens of the matched label of the current entity, e.g. "Barack Hussein Obama" -> 3

The confidence is calculated as follows:

confidence = (matched/max_matched)^2 * (matched/span) * (matched/label_tokens)

Some Examples:

- "Barack Hussein Obama" matched against the text "Barack Obama" results in a confidence of (2/2)^2 * (2/2) * (2/3) = 0.67

- "University Michigan" matched against the text "University of Michigan" results in a confidence of (2/2)^2 * (2/3) * (2/2) = 0.67

- "New York City" matched against the text "New York Rangers" (assuming that "New York Rangers" is the best match) results in a confidence of (2/3)^2 * (2/2) * (2/3) = 0.3. Note that the best match "New York Rangers" has max_matched=3 and gets a confidence of 1.
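The confidence formula translates directly into code. A minimal sketch: `SuggestionConfidence` is a hypothetical name, and the four inputs are assumed to be computed by the matching step as defined under Input Parameters.

```java
// Hypothetical sketch of the confidence formula; not Stanbol code.
final class SuggestionConfidence {

    private SuggestionConfidence() {}

    /** (matched/max_matched)^2 * (matched/span) * (matched/label_tokens) */
    static double confidence(int matched, int maxMatched, int span, int labelTokens) {
        double relativeMatch = (double) matched / maxMatched;   // penalizes weaker suggestions
        return relativeMatch * relativeMatch
                * ((double) matched / span)                      // penalizes gaps in the text
                * ((double) matched / labelTokens);              // penalizes unmatched label tokens
    }
}
```

For instance, `confidence(2, 3, 2, 3)` reproduces the "New York City" example (8/27, about 0.3). The squared first factor makes suggestions that match fewer tokens than the best suggestion drop off quickly.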

The calculation of the confidence is currently implemented directly in the EntityLinker. To support different matching strategies it would need to be externalized into its own interface.

Notes about the TaxonomyLinkingEngine

The KeywordLinkingEngine is a more modular re-implementation of the TaxonomyLinkingEngine and is therefore better suited for future improvements and extensions, as requested by STANBOL-303. As of STANBOL-506 the TaxonomyLinkingEngine is deprecated and will be deleted from SVN.