Working with Custom Vocabularies

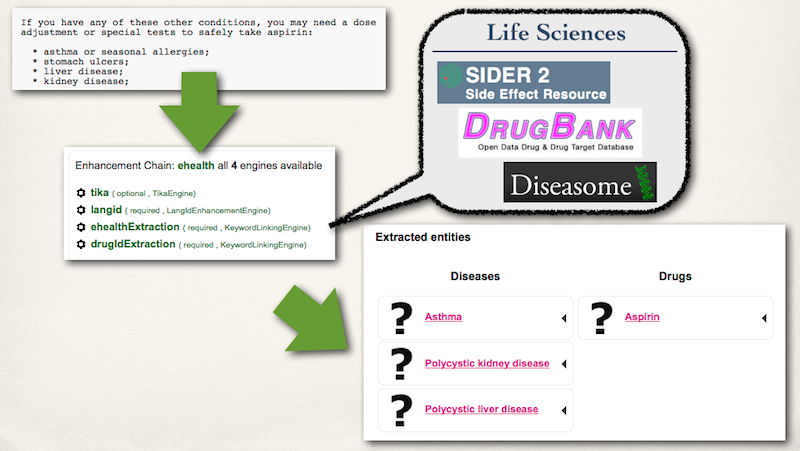

The ability to work with custom vocabularies is necessary for many use cases. These range from detecting named entities specific to a company to detecting and working with concepts from a specific domain, such as the life sciences, as illustrated by the accompanying figure.

The aim of this usage scenario is to provide Apache Stanbol users with all the required knowledge to customize Apache Stanbol to be used in their specific domain. This includes

- Two possibilities to manage custom vocabularies:

    - via the RESTful interface provided by a Managed Site, or

    - by using a Referenced Site with a full local index

- Building full local indexes with the Entityhub Indexing Tool

- Importing Indexes to Apache Stanbol

- Configuring the Stanbol Enhancer to make use of the indexed and imported Vocabularies

Overview

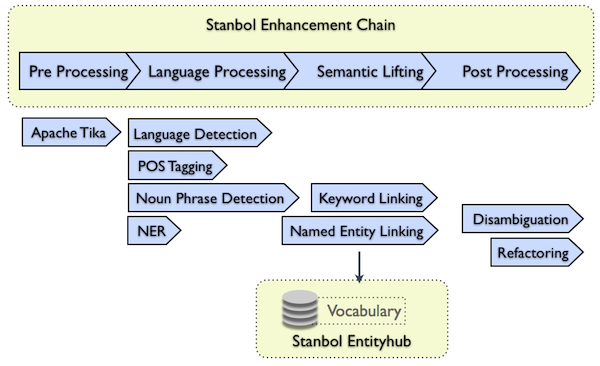

The figure shows the typical enhancement workflow. It may start with some preprocessing steps (e.g. the conversion of rich text formats to plain text), followed by the Natural Language Processing phase. Next, 'Semantic Lifting' aims to connect the results of text processing with the application domain of the user. During postprocessing those results may be refined further.

This usage scenario is all about the Semantic Lifting phase. This phase largely determines how well enhancement results match the requirements of the user's application domain. Users who need to process health-related documents will need to provide vocabularies containing life science entities, otherwise the Stanbol Enhancer will not perform as expected on those documents. Similarly, processing customer requests can only work if Stanbol has access to the data managed by the CRM.

This scenario aims to provide Stanbol users with all information necessary to use Apache Stanbol in scenarios where domain specific vocabularies are required.

Managing Custom Vocabularies with the Stanbol Entityhub

By default the Stanbol Enhancer uses the Entityhub component for linking Entities with mentions in the processed text. While users may extend the Enhancer to allow the usage of other sources, this is outside the scope of this scenario.

The Stanbol Entityhub provides two possibilities to manage vocabularies:

- Managed Sites: A fully readable and writable storage for Entities. Once created, users can use a RESTful interface to create, update, retrieve, query and delete entities.

- Referenced Site: A read-only version of a Site that can either be used as a local cache of remotely managed data (such as a Linked Data server) or use a fully local index of the knowledge base, which is the relevant case in the context of this scenario.

As a rule of thumb, users should prefer a Managed Site if the vocabulary changes regularly and those changes need to be reflected in the enhancement results of processed documents. A Referenced Site is typically the better choice for vocabularies that do not change on a regular basis and/or for users who want to apply advanced rules while indexing a dataset.

Using an Entityhub Managed Site

How to use a Managed Site is already described in detail by the Documentation of Managed Sites. To configure a new Managed Site on the Entityhub users need to create two components:

- the Yard - the storage component of the Stanbol Entityhub. While there are multiple Yard implementations, the SolrYard implementation should be used for Entity Linking.

- the YardSite - the component that implements the ManagedSite interface.

After completing those two steps, an empty Managed Site should be available at

http://{stanbol-host}/entityhub/site/{managed-site-name}/

and users can start to upload the Entities of the controlled vocabulary by using the RESTful interface such as

curl -i -X PUT -H "Content-Type: application/rdf+xml" -T {rdf-xml-data} \
    "http://{stanbol-host}/entityhub/site/{managed-site-name}/entity"
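To upload more than one file, the request can be wrapped in a small loop. The following is only a minimal sketch and not part of Stanbol: the function name upload_entities, the variables STANBOL_HOST, SITE and DRY_RUN, their default values and the *.rdf file suffix are assumptions made for illustration. With DRY_RUN=1 (the default chosen here) the commands are printed instead of executed.

```shell
# Hypothetical helper: PUT every RDF/XML file of a directory to a Managed Site.
# STANBOL_HOST, SITE and DRY_RUN are illustrative names, not Stanbol settings.
STANBOL_HOST="${STANBOL_HOST:-localhost:8080}"
SITE="${SITE:-myvocab}"
DRY_RUN="${DRY_RUN:-1}"

upload_entities() {
  dir="$1"
  url="http://${STANBOL_HOST}/entityhub/site/${SITE}/entity"
  for file in "$dir"/*.rdf; do
    [ -e "$file" ] || continue   # no *.rdf files in this directory
    if [ "$DRY_RUN" = "1" ]; then
      # Only show the request that would be sent
      echo "curl -i -X PUT -H 'Content-Type: application/rdf+xml' -T $file $url"
    else
      curl -i -X PUT -H "Content-Type: application/rdf+xml" -T "$file" "$url"
    fi
  done
}
```

Calling upload_entities ./rdf-export first with DRY_RUN=1 allows checking the target URL before any data is sent.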

If you have opted to use a Managed Site for managing your entities, you can now skip ahead to the section 'Configuring the Stanbol Enhancer for your custom Vocabularies'.

Using an Entityhub Referenced Site

Referenced Sites are used by the Stanbol Entityhub to reference external knowledge bases. This can be done by configuring remote services for dereferencing and querying information, but also by providing a full local index of the referenced knowledge base.

When using a Referenced Site in combination with the Stanbol Enhancer, it is highly recommended for performance reasons to provide a full local index. To create such local indexes Stanbol provides the Entityhub Indexing Tool. See the following section for detailed information on how to use this tool.

Building full local indexes with the Entityhub Indexing Tool

The Entityhub Indexing Tool creates full local indexes of knowledge bases that can be loaded into the Stanbol Entityhub as Referenced Sites. Users of Managed Sites may want to skip this section.

Users of the Entityhub Indexing Tool will typically need to complete the steps described in the following sub sections.

Step 1 : Compile and assemble the indexing tool

The indexing tool provides a default configuration for creating an Apache Solr index of RDF files (e.g. a SKOS export of a thesaurus or a set of foaf files).

To build the indexing tool from source (recommended) you will need to check out Apache Stanbol from SVN (or download a source release). Instructions for this can be found here. If you want to skip this step, you can also obtain a binary version from the IKS development server (search the sub-folders of the different versions for a file named like "org.apache.stanbol.entityhub.indexing.genericrdf-*-jar-with-dependencies.jar").

If you downloaded or checked out ("svn co") the source to {stanbol-source} and successfully built it as described in the Tutorial, you still need to assemble the indexing tool with

$ cd {stanbol-source}/entityhub/indexing/genericrdf/
$ mvn assembly:single

and move the assembled indexing tool from

{stanbol-source}/entityhub/indexing/genericrdf/target/org.apache.stanbol.entityhub.indexing.genericrdf-*-jar-with-dependencies.jar

into the directory you plan to use for the indexing process. We will refer to this directory as {indexing-working-dir}.

Step 2 : Create the index

Initialize the tool with

$ cd {indexing-working-dir}
$ java -jar org.apache.stanbol.entityhub.indexing.genericrdf-*-jar-with-dependencies.jar init

This will create/initialize the default configuration for the Indexing Tool including (relative to {indexing-working-dir}):

- /indexing/config: Folder containing the default configuration, including the "indexing.properties" and "mappings.txt" files.

- /indexing/resources: Folder with the source files used for indexing, including the "rdfdata" folder where you will need to copy the RDF files to be indexed.

- /indexing/destination: Folder used to write the data during the indexing process.

- /indexing/dist: Folder where you will find the {name}.solrindex.zip and org.apache.stanbol.data.site.{name}-{version}.jar files needed to install your index in the Apache Stanbol Entityhub.

After the initialization you will need to provide the following configurations in files located in the configuration folder ({indexing-working-dir}/indexing/config)

- Within the indexing.properties file you need to set the {name} of your index by changing the value of the "name" property. In addition you should also provide a "description". At the end of the indexing.properties file you can also specify the license and attribution for the data you index. The Entityhub will ensure that this information is included with any entity data returned for requests.

- Optionally, if your data uses namespaces that are not present in prefix.cc (or the server used for indexing does not have internet connectivity), you can manually define the required prefixes by creating or editing the indexing/config/namespaceprefix.mappings file. The syntax is '{prefix}\t{namespace}\n', where {prefix} may contain the characters [0..9A..Za..z-_] and {namespace} must end with '#' or '/' for URLs and ':' for URNs.

- Optionally, if the data you index uses uncommon namespaces, you will need to add those to the mappings.txt file (here is an example including default and specific mappings for one dataset).

- Optionally, if you want to use a custom SolrCore configuration, the core configuration needs to be copied to indexing/config/{core-name}. A default configuration to start from can be downloaded from the Stanbol SVN and extracted to the indexing/config/ folder. If the {core-name} is different from the 'name' configured in indexing.properties, then the 'solrConf' parameter of the 'indexingDestination' MUST be set to 'solrConf:{core-name}'. After that, users can make custom adaptations to the SolrCore configuration used for indexing.
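The first two configuration steps can be scripted. The sketch below is illustrative only: the function names configure_index and add_namespace_prefix are hypothetical, and it assumes that indexing.properties contains plain key=value lines starting with "name=" and "description=". Values containing the characters | or & would need escaping for sed and are not handled here.

```shell
# Sketch: set "name" and "description" in indexing.properties.
# Assumes key=value lines and an already initialized {indexing-working-dir} ($1).
configure_index() {
  config_dir="$1/indexing/config"
  name="$2"
  description="$3"

  # Rewrite the two properties; all other lines are kept unchanged.
  sed -e "s|^name=.*|name=${name}|" \
      -e "s|^description=.*|description=${description}|" \
      "$config_dir/indexing.properties" > "$config_dir/indexing.properties.tmp" &&
    mv "$config_dir/indexing.properties.tmp" "$config_dir/indexing.properties"
}

# Sketch: append one mapping in the documented {prefix}<TAB>{namespace} syntax.
# $1: {indexing-working-dir}, $2: prefix, $3: namespace (ending in '#', '/' or ':')
add_namespace_prefix() {
  printf '%s\t%s\n' "$2" "$3" >> "$1/indexing/config/namespaceprefix.mappings"
}
```

For example, configure_index . myvocab "My company thesaurus" followed by add_namespace_prefix . ex "http://example.org/ns#" would prepare an index called myvocab; both the index name and the namespace are made-up values.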

Finally you will also need to copy your source files into the source directory {indexing-working-dir}/indexing/resources/rdfdata. All files within this directory will be indexed. The indexing tool supports the most common RDF serializations. You can also directly index compressed RDF files.

For more details about possible configurations, please consult the README.

Once all source files are in place, you can start the index process by running

$ cd {indexing-working-dir}
$ java -Xmx1024m -jar org.apache.stanbol.entityhub.indexing.genericrdf-*-jar-with-dependencies.jar index

Depending on your hardware and on the complexity and size of your sources, it may take several hours to build the index. As a result, you will get an archive of an Apache Solr index together with an OSGi bundle to work with the index in Stanbol. Both files will be located within the indexing/dist folder.

IMPORTANT NOTES:

- The import of the RDF files into the Jena TDB triple store, which is used as the source for indexing, takes a lot of time. Because of that, imported data is reused across multiple runs of the indexing tool. This has two important effects users need to be aware of:

    - Already imported RDF files should be removed from {indexing-working-dir}/indexing/resources/rdfdata to avoid re-importing them on every run of the tool. NOTE: newer versions of the Entityhub Indexing Tool might automatically move successfully imported RDF files to a different folder.

    - If the RDF data changes, you will need to delete the Jena TDB store so that those changes are reflected in the created index. To do this, delete the {indexing-working-dir}/indexing/resources/tdb folder.

- The destination folder {indexing-working-dir}/indexing/destination is also NOT deleted between multiple calls to index. As a result, Entities indexed by previous indexing calls are not deleted. While this allows you to index a dataset in multiple steps, or even to combine data from multiple datasets in a single index, it also means that you will need to delete the destination folder if the RDF data you index has changed, especially if some Entities were deleted.
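When the source data has changed, both kinds of leftover state described in these notes can be cleared in one step. The following is a small sketch; the function name reset_indexing_state is hypothetical, and the two paths are the ones named in the notes.

```shell
# Sketch: discard state kept from earlier runs before re-indexing changed data.
# $1: the {indexing-working-dir}
reset_indexing_state() {
  working_dir="$1"
  # Refuse to run without a directory, so rm never targets /indexing/...
  [ -n "$working_dir" ] || { echo "usage: reset_indexing_state <dir>" >&2; return 1; }

  # Imported triples (Jena TDB store); the next run imports rdfdata again
  rm -rf "$working_dir/indexing/resources/tdb"
  # Entities written by previous indexing runs
  rm -rf "$working_dir/indexing/destination"
}
```

After such a reset, the complete set of RDF files has to be present in indexing/resources/rdfdata again, because nothing from the earlier import is left.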

Step 3 : Initialize the index within Apache Stanbol

We assume that you already have a running Apache Stanbol instance at http://{stanbol-host} and that {stanbol-working-dir} is the working directory of that instance on the local hard disk. To install the created index you need to

- copy the "{name}.solrindex.zip" file to the {stanbol-working-dir}/stanbol/datafiles directory (NOTE: if you run the 0.9.0-incubating version the path is {stanbol-working-dir}/sling/datafiles).

- install the org.apache.stanbol.data.site.{name}-{version}.jar in the OSGi environment of your Stanbol instance, e.g. by using the Bundle tab of the Apache Felix web console at http://{stanbol-host}/system/console/bundles

You will find both files in the {indexing-working-dir}/indexing/dist/ folder.
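The copy step can be scripted as follows. This is a sketch under the assumption of a current (non 0.9.0-incubating) directory layout; the function name install_index_archive is hypothetical. The bundle still has to be installed separately, for example through the Felix web console.

```shell
# Sketch: copy {name}.solrindex.zip into the datafiles directory of Stanbol.
# $1: {indexing-working-dir}, $2: {stanbol-working-dir}, $3: {name}
install_index_archive() {
  archive="$1/indexing/dist/$3.solrindex.zip"
  datafiles="$2/stanbol/datafiles"

  [ -f "$archive" ] || { echo "missing archive: $archive" >&2; return 1; }
  [ -d "$datafiles" ] || { echo "missing directory: $datafiles" >&2; return 1; }

  cp "$archive" "$datafiles/"
}
```

Checking for the datafiles directory instead of creating it helps to catch a wrong {stanbol-working-dir} early.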

After the installation your data will be available at

http://{stanbol-host}/entityhub/site/{name}

You can use the Web UI of the Stanbol Enhancer to explore your vocabulary. Note that for a big vocabulary it might take some time until the site becomes functional.

Configuring the Stanbol Enhancer for your custom Vocabularies

This section covers how to configure the Apache Stanbol Enhancer to recognize and link entities of your custom vocabulary with processed documents.

Generally there are two possible ways you can use to recognize entities of your vocabulary:

- Named Entity Linking: This first uses Named Entity Recognition (NER) for spotting "named entities" in the text and then tries to link those named entities with entities defined in your vocabulary. This approach is limited to entities of the types person, organization and place. So if your vocabulary contains entities of other types, they will not be recognized. In addition it also requires the availability of NER for the language(s) of the processed documents.

- Entity Linking: This uses the labels of entities in your vocabulary for the recognition and linking process. Natural Language Processing (NLP) techniques such as part-of-speech (POS) detection can be used to improve performance and results, but this also works without NLP support. As extraction and linking is based on labels mentioned in the analyzed content, this method has no restrictions regarding the types of your entities.

For more information about this you might also have a look at the introduction of the multi lingual usage scenario.

TIP: If you are unsure about what to use you can also start with configuring both options to give it a try.

Depending on whether you want to use named entity linking or entity linking, the configuration of the enhancement chain and of the enhancement engine making use of your vocabulary will be different. The following two sub-sections provide more information on that.

Configuring Named Entity Linking

In case named entity linking is used the linking with the custom vocabulary is done by the Named Entity Tagging Engine. For the configuration of this engine you need to provide the following parameters

- The "name" of the enhancement engine. It is recommended to use "{name}Linking" - where {name} is the name of the Entityhub Site (ReferenceSite or ManagedSite).

- The name of the referenced site holding your vocabulary. Here you have to configure the {name}.

- Enable/disable persons, organizations and places and, if enabled, configure the rdf:type used by your vocabulary for those types. If you do not want to restrict the type, you can also leave the type field empty.

- Define the property used to match against the named entities detected by the used NER engine(s).

For more detailed information please see the documentation of the Named Entity Tagging Engine.

Note that for using named entity linking you also need to ensure that an enhancement engine that provides NER (Named Entity Recognition) is available in the enhancement chain. See the Language Support section of the Stanbol NLP processing documentation for detailed information on languages with NER support.

The following example shows an enhancement chain for named entity linking based on OpenNLP and CELI as NLP processing modules:

- "langdetect" - Language Detection Engine - to detect the language of the parsed content - a pre-requirement of all NER engines

- "opennlp-ner" - for NER support in English, Spanish and Dutch via the Named Entity Extraction Enhancement Engine

- "celiNer" - for NER support in French and Italian via the CELI NER engine

- "{name}Linking" - the Named Entity Tagging Engine for your vocabulary as configured above.

Both the weighted chain and the list chain can be used for the configuration of such a chain.

Configuring Entity Linking

First it is important to note the difference between Named Entity Linking and Entity Linking. While Named Entity Linking only considers Named Entities detected by NER (Named Entity Recognition), Entity Linking works on words (tokens). As NER support is only available for a limited number of languages, Named Entity Linking is only an option for those languages. Entity Linking only requires correct tokenization of the text, so it can be used for nearly every language. However, NOTE that POS (Part of Speech) tagging will greatly improve quality and also speed, as it allows looking up nouns only. Chunking (Noun Phrase detection), NER and Lemmatization results are also considered by Entity Linking to improve vocabulary lookups. For details see the documentation of the Entity Linking Process.

The second big difference is that Named Entity Linking can only support Entity types supported by the NER models (Persons, Organizations and Places). Entity Linking does not have this restriction. This advantage comes with the disadvantage that Entity lookups in the controlled vocabulary are based only on label similarities, whereas Named Entity Linking also uses the type information provided by NER.

To use Entity Linking with a custom vocabulary, users need to configure an instance of the Entityhub Linking Engine or an FST Linking Engine. While both of those engines provide 20+ configuration parameters, only very few of them are required for a working configuration:

- The "Name" of the enhancement engine. It is recommended to use something like "{name}Extraction" or "{name}-linking" - where {name} is the name of the Entityhub Site

- The link to the data source

    - in case of the Entityhub Linking Engine this is the name of the Managed or Referenced Site holding your vocabulary, so if you followed this scenario you need to configure the {name}

    - in case of the FST Linking Engine this is the link to the SolrCore with the index of your custom vocabulary. If you followed this scenario you need to configure the {name} and set the field name encoding to "SolrYard".

- The configuration of the field used for linking

    - in case of the Entityhub Linking Engine the "Label Field" needs to be set to the URI of the property holding the labels. You can only use a single field. If you want to use values of several fields you need to adapt your indexing configuration to copy the values of those fields to a single one (e.g. by adding skos:prefLabel > rdfs:label and skos:altLabel > rdfs:label to the {indexing-working-dir}/indexing/config/mappings.txt config).

    - in case of the FST Linking Engine you need to provide the FST Tagging Configuration. If you store your labels in the rdfs:label field and you want to support all languages present in your vocabulary, use *;field=rdfs:label;generate=true. NOTE that generate=true is required to allow the engine to (re)create FST models at runtime.

- The "Link ProperNouns only" option: If the custom vocabulary contains Proper Nouns (Named Entities), then this parameter should be activated. It causes the Entity Linking process to skip queries for common nouns, reducing the number of queries against the controlled vocabulary by ~70%. However, this is not feasible if the vocabulary contains Entities that are common nouns in the language.

- The "Type Mappings" might be interesting for you if your vocabulary contains custom types as those mappings can be used to map 'rdf:type's of entities in your vocabulary to 'dc:type's used for 'fise:TextAnnotation's - created by the Apache Stanbol Enhancer to annotate occurrences of extracted entities in the parsed text. See the type mapping syntax and the usage scenario for the Apache Stanbol Enhancement Structure for details.
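The label-copy rules mentioned for the "Label Field" can be added to the indexing configuration with a few lines of shell. This is a sketch only: the function name add_label_mappings is hypothetical, and it assumes that mappings.txt, if it already exists, ends with a newline.

```shell
# Sketch: copy skos:prefLabel and skos:altLabel values to rdfs:label at indexing time.
# $1: the {indexing-working-dir}
add_label_mappings() {
  mappings="$1/indexing/config/mappings.txt"
  for rule in 'skos:prefLabel > rdfs:label' 'skos:altLabel > rdfs:label'; do
    # Append each rule only once, so the function can be re-run safely
    grep -qxF "$rule" "$mappings" 2>/dev/null || printf '%s\n' "$rule" >> "$mappings"
  done
}
```

Because the mappings are part of the indexing configuration, the index presumably needs to be rebuilt and installed again before the combined rdfs:label field is available for linking.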

The following example shows an enhancement chain using OpenNLP for NLP:

- "langdetect" - Language Detection Engine - to detect the language of the parsed content - a pre-requirement of all NER engines

- opennlp-sentence - Sentence detection with OpenNLP

- opennlp-token - OpenNLP based Word tokenization. Works for all languages where white spaces can be used to tokenize.

- opennlp-pos - OpenNLP Part of Speech tagging

- opennlp-chunker - The OpenNLP chunker provides Noun Phrases

- "{name}Extraction" - the Entityhub Linking Engine or FST Linking Engine configured for the custom vocabulary.

Both the weighted chain and the list chain can be used for the configuration of such a chain.

The documentation of the Stanbol NLP processing module provides detailed information about integrated NLP frameworks and supported languages.

How to use enhancement chains

In the default configuration the Apache Stanbol Enhancer provides several enhancement chains including:

1) a "default" chain providing Named Entity Linking based on DBpedia and Entity Linking based on the Entityhub

2) the "language" chain that is intended to be used to detect the language of parsed content

3) a "dbpedia-proper-noun-linking" chain showing Named Entity Linking based on DBpedia
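A specific chain can be addressed by name when sending content to the Enhancer. The sketch below assumes the path /enhancer/chain/{chain-name} of the Stanbol REST API, which is not described in this section and should be checked against your Stanbol version. The function names chain_url and enhance, the variable STANBOL_HOST and its default value are illustrative.

```shell
# Illustrative default; replace with your {stanbol-host}
STANBOL_HOST="${STANBOL_HOST:-localhost:8080}"

# Build the endpoint for a chain; an empty name means the chain bound to /enhancer
chain_url() {
  if [ -n "$1" ]; then
    echo "http://${STANBOL_HOST}/enhancer/chain/$1"
  else
    echo "http://${STANBOL_HOST}/enhancer"
  fi
}

# Send plain text to a chain and request the enhancement results as Turtle
# $1: chain name (may be empty), $2: text to analyse
enhance() {
  curl -s -X POST \
       -H "Content-Type: text/plain" \
       -H "Accept: text/turtle" \
       --data "$2" "$(chain_url "$1")"
}
```

For example, enhance language "Paris is the capital of France." would send the sentence to the "language" chain of a running instance.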

Change the enhancement chain bound to "/enhancer"

The enhancement chain bound to

http://{stanbol-host}/enhancer

is determined by the following rules

1) the chain with the name "default". If more than one chain with that name is present, then rules (2) and (3) for resolving name conflicts apply. If none,

2) the chain with the highest "service.ranking". If several have the same ranking,

3) the chain with the lowest "service.id".

You can change this by configuring the names and/or the "service.ranking" of the enhancement chains. Note that (2) and (3) are also used to resolve name conflicts of chains. If you configure two enhancement chains with the same name, only the one with the highest "service.ranking" and lowest "service.id" will be accessible via the RESTful API.
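The selection rules can be illustrated with a few lines of shell. This is only a model of the rules as described here, not Stanbol code: the function name select_default_chain and the input format (one chain per line as "name service.ranking service.id") are assumptions made for the example.

```shell
# Sketch: pick the chain that would be bound to /enhancer.
# stdin: one chain per line, "<name> <service.ranking> <service.id>"
select_default_chain() {
  chains=$(cat)

  # Rule 1: prefer chains named "default"; otherwise consider all chains
  candidates=$(printf '%s\n' "$chains" | awk '$1 == "default"')
  [ -n "$candidates" ] || candidates="$chains"

  # Rule 2: highest service.ranking first; rule 3: lowest service.id first
  printf '%s\n' "$candidates" | sort -k2,2nr -k3,3n | head -n 1
}
```

Given the three lines "default 0 12", "default 10 40" and "language 100 3", the function selects "default 10 40": the name "default" takes precedence over the higher ranking of the "language" chain, and among the two chains named "default" the higher ranking wins.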

Examples

While this usage scenario provides the basic information about how to index/use custom vocabularies there are a lot of additional possibilities to configure the indexing process and the enhancement engines.

If you are interested in the more advanced options, the following resources/examples might be of interest to you.

- Readme of the generic RDF indexing tool (see also "{stanbol-source-root}/entityhub/indexing/genericrdf" if you have obtained the source code of Apache Stanbol).

- eHealth example: This provides an indexing and enhancement engine configuration for 4 datasets of the life science domain. It goes into some of the details, such as a customized Solr schema.xml configuration for the Apache Stanbol Entityhub; Keyword Linking Engine configurations optimized for extracting alpha-numeric IDs; using LD-Path to merge information of different datasets by following owl:sameAs relations; ... (see also "{stanbol-trunk}/demo/ehealth" if you have checked out the trunk of Apache Stanbol). In addition this example may also provide some information on how to automate some of the steps described here by using shell scripts and maven.

- In addition to the Generic RDF Indexing Tool there are also two other special versions for dbpedia ("{stanbol-trunk}/entityhub/indexing/dbpedia") and DBLP ("{stanbol-trunk}/entityhub/indexing/dblp"). While you will not want to use these versions to index your vocabularies, the default configurations of those tools might still provide some valuable information.

Demos and Resources

The IKS development server runs a rather advanced configuration of Stanbol with a lot of custom datasets and corresponding Enhancement Chain configurations. You can access this Stanbol instance at http://dev.iks-project.eu:8081/. In addition this server also hosts a set of prebuilt indexes