Working with Multiple Languages

To understand multilingual support in Apache Stanbol, one needs to consider that it supports two different workflows for extracting entities from parsed text:

- Named Entity Linking: This first uses Named Entity Recognition (NER) to spot Entities and then links the Named Entities found with Entities defined by the Controlled Vocabulary (e.g. DBpedia.org). For the NER step the NamedEntityExtraction engine, the CELI NER engine (which uses the linguagrid.org service) or the OpenCalais engine can be used. The linking functionality is implemented by the NamedEntityTaggingEngine. Multilingual support depends on the availability of NER models for a language. Note also that separate models are required for each entity type. Typically supported types are Persons, Organizations and Places.

- Keyword Linking: Entity label based spotting and linking of Entities as implemented by the KeywordLinkingEngine. Natural Language Processing (NLP) techniques such as Part-of-Speech (POS) processing are used to improve the performance and results of the extraction process but are not an absolute requirement. As extraction only requires a label, this method is also independent of the types of the Entities.

The following languages are supported for NER and can therefore be used for Named Entity Linking:

- English (via NamedEntityTaggingEngine, OpenCalais)

- Spanish (via NamedEntityTaggingEngine, OpenCalais)

- Dutch (via NamedEntityTaggingEngine)

- French (via CELI NER engine, OpenCalais)

- Italian (via CELI NER engine)

NOTE: The CELI and OpenCalais engines require users to create an account with the respective services. In addition, analyzed content will be sent to those services!

For the following languages NLP support is available to improve results when using the Keyword Extraction Engine:

- Danish

- Dutch

- English

- German

- Portuguese

- Spanish (added by STANBOL-688)

- Swedish

Configuration steps

This section describes the typical configuration steps required for multilingual text processing with Apache Stanbol.

- Ensure that labels for the {language(s)} are available in the controlled vocabulary: By default labels with the given language and with no defined language will be used for linking.

- Add language models to your Stanbol instance: This includes general NLP models, NER models and possibly the configuration of external services such as CELI or OpenCalais

- Configure the Named Entity Linking / Keyword Linking chain(s)

- ensure language detection support (e.g. by using the Language Identification Engine)

- decide to use (1) Named Entity Linking or (2) Keyword Linking based on the supported/required languages and the supported/present types of Entities in the controlled vocabulary

- configure the required Enhancement Engines and one or more Enhancement Chains for processing parsed content.

Install your multilingual controlled vocabulary

If you want to link Entities in a given language you MUST ensure that labels in that language are present in the controlled vocabulary you want to link against. It is also possible to tell Stanbol that labels are valid regardless of the language by adding labels without a language tag.
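
The label selection rule described above can be sketched as follows. This is a minimal illustration and not Stanbol's implementation: labels are modelled as hypothetical (text, language) pairs, and the function name and the sample labels are assumptions made for the example.

```python
from typing import List, Optional, Tuple

# A label is modelled as (text, language); language None means "no language tag".
Label = Tuple[str, Optional[str]]


def labels_for_linking(labels: List[Label], language: str) -> List[str]:
    """Keep labels in the requested language plus labels without a language tag."""
    wanted = language.lower()
    return [text for text, lang in labels if lang is None or lang.lower() == wanted]


# Hypothetical labels of a single vocabulary entry.
labels = [("Vienna", "en"), ("Wien", "de"), ("Vienne", "fr"), ("VIE", None)]
print(labels_for_linking(labels, "de"))
```

For German text only the German label and the untagged label remain candidates, which is why an entry without labels in the processed language (and without untagged labels) can never be linked.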

In case you want to link against your own vocabulary you will need to create your own index at this point. If you want to use an already indexed dataset you will need to install it in your Stanbol environment by:

- copying the {dataset}.solr.zip file to the {stanbol-working-dir}/stanbol/datafiles directory

- installing the org.apache.stanbol.data.site.{dataset}-1.0.0.jar bundle (e.g. by using the Bundle Tab of the Apache Felix Web Console - http://{host}:{port}/system/console/bundle).
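
The copy step can also be scripted. The following is a small sketch under the assumption that the archive is available locally; the function name install_index is hypothetical, and the bundle installation through the Felix Web Console is not covered.

```python
import shutil
from pathlib import Path


def install_index(archive: Path, stanbol_working_dir: Path) -> Path:
    """Copy a {dataset}.solr.zip archive into {stanbol-working-dir}/stanbol/datafiles."""
    if not archive.name.endswith(".solr.zip"):
        raise ValueError(f"expected a .solr.zip archive, got {archive.name}")
    datafiles = stanbol_working_dir / "stanbol" / "datafiles"
    datafiles.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    # copy2 returns the path of the newly created file
    return Path(shutil.copy2(archive, datafiles / archive.name))
```

A call such as install_index(Path("mydata.solr.zip"), Path("/opt/stanbol")) would place the archive in /opt/stanbol/stanbol/datafiles; both paths are placeholders.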

NOTES:

- Indexed datasets can be found at the download section of the IKS Development Server

- In case of DBpedia the installation of the org.apache.stanbol.data.site.dbpedia-1.0.0.jar bundle is NOT required, because DBpedia is already included in the default configuration of the launcher.

Build and add the necessary language bundles

Users of the full-war or full launcher can skip this step, as all available language bundles are included by default. In case you use the stable launcher or a custom-built launcher you will need to provide the required language models manually.

In principle there are two possibilities to add language processing and NER models to your Stanbol instance:

- you can use the OSGI bundles: These use artifactIds like org.apache.stanbol.data.opennlp.lang.{language}-.jar and org.apache.stanbol.data.opennlp.ner.{language}-.jar and can be found under {stanbol-root}/data/opennlp/[ner|lang]/{language} in the Apache Stanbol source

- you can obtain the OpenNLP language models yourself and copy them to the {stanbol-working-dir}/stanbol/datafiles folder.

While the latter provides more flexibility, it also requires a basic understanding of the OpenNLP models and of the processing workflow of the KeywordLinkingEngine.

Configuring Language Identification Support

By default Apache Stanbol uses the Language Identification Engine that is based on the language identification functionality provided by Apache Tika. As an alternative there is also a language identification engine that uses linguagrid.org.

If you configure your own Enhancement Chain it is important to use one of those Engines and to ensure that it processes the content before the other engines referenced in this document.
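
The ordering requirement can be checked for an explicitly ordered engine list (as used by a "List Chain"). The sketch below is an illustration only: the function name is hypothetical, and which engine names count as language identification or as language dependent is an assumption supplied by the caller.

```python
from typing import List, Set


def langid_precedes(engines: List[str], langid_names: Set[str],
                    dependent_names: Set[str]) -> bool:
    """True if a language identification engine runs before every dependent engine."""
    positions = [i for i, name in enumerate(engines) if name in langid_names]
    if not positions:
        return False  # no language identification engine in the chain at all
    first_langid = positions[0]
    return all(i > first_langid
               for i, name in enumerate(engines) if name in dependent_names)


# Engine names as used in the example chains of this document.
chain = ["langid", "ner", "celiNer", "dbpediaLinking"]
print(langid_precedes(chain, {"langid"}, {"ner", "celiNer", "dbpediaLinking"}))
```

Engines that do not depend on the detected language may still appear before the language identification engine without failing the check.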

Configure Named Entity Linking

To use Named Entity Linking users need to add at least two Enhancement Engines to the current Enhancement Chain:

- NER Engine: possibilities include

- NamedEntityTaggingEngine - default name "ner"

- CeliNamedEntityExtractionEnhancementEngine - default name "celiNer": To use this Engine you need to configure a "License Key" or activate the usage of the Test Account. After providing this configuration you will need to manually disable/enable this engine to bring it from the "unsatisfied" to the "active" state.

- OpenCalais - default name "opencalais": To use this Engine you need to configure your OpenCalais license key. You should also activate the NER only mode if you use it for this purpose. After providing this configuration you will need to manually disable/enable this engine to bring it from the "unsatisfied" to the "active" state.

- Entity Linking: possibilities include

- Named Entity Tagging Engine: Multiple instances of this engine can be created for different controlled vocabularies. The default configuration of the Stanbol Launchers includes an instance that is configured to link Entities from DBpedia.org. To link to your own datasets you will need to create/configure your own instances of this engine by using the Configuration Tab of the Apache Felix WebConsole - http://{host}:{port}/system/console/configMgr.

- Geonames Enhancement Engine: Uses the web services provided by geonames.org to link extracted Places. To use this Engine you need to configure your geonames "License Key" or to activate the anonymous geonames.org service. After providing this configuration you will need to manually disable/enable this engine to bring it from "unsatisfied" to the "active" state.

It is important to note that one can include multiple NER and Entity Linking Engines in a single Enhancement Chain. A typical example would be:

- "langid" - the required language identification (see previous section)

- "ner" - for NER support in English, Spanish and Dutch

- "celiNer" - for NER support in French and Italian

- "dbpediaLinking" - default configuration of the Named Entity Tagging Engine supporting linking with Entities defined by DBpedia.org

- "{yourLinking}" - one or more additional Named Entity Tagging Engines supporting linking against your own vocabularies (e.g. customers, employees, project partners, suppliers, competitors …)

Configure KeywordLinking

To use Keyword Linking one needs only to create/configure an instance of the KeywordLinkingEngine and add it to the current Enhancement Chain.

The following describes the options provided by the KeywordLinkingEngine when configured via the Configuration Tab of the Apache Felix WebConsole - http://{host}:{port}/system/console/configMgr.

- Name: The name of the Engine as referenced in the configuration of the Enhancement Chain

- Referenced Site: The ID of the Entityhub Referenced Site holding the Controlled Vocabulary. The referenced site id is the name of the referenced site as included in the URL - http://{stanbol-instance}/entityhub/site/{referenced-site-id}

- Label Field: The field used to match Entities with mentions within the parsed text. For well known namespaces you can use "{prefix}:{localName}" instead of the full URI

- Case Sensitivity: Enables case sensitive matching of labels. This works around problems such as suggesting abbreviations like "AND" for mentions of the English stop word "and".

- Type Field: The field used to retrieve the types of matched Entities. The values of this field are added to the 'fise:entity-type' property of created 'fise:EntityAnnotation's.

- Redirect Field: Entities may define redirects to other Entities (e.g. "USA"(http://dbpedia.org/resource/USA) -> "United States"(http://dbpedia.org/resource/United_States)). Values of this field are expected to link to other entities that are part of the controlled vocabulary

- Redirect Mode: Defines how to process redirects of Entities mentioned in the parsed content. Three modes to deal with such links are supported: ignore redirects; add values from redirected Entities to the extracted ones; follow redirects and suggest the redirected entity instead of the extracted one.

- Min Token Length: The minimum length of Tokens used to look up Entities within the Controlled Vocabulary. This parameter is ignored in case a certain POS (Part of Speech) tag is available.

- Keyword Tokenizer: Forces the use of a word tokenizer that is optimized for alphanumeric keys such as ISBN numbers, product codes ...

- Suggestions: The maximum number of suggestions returned for a single mention.

- Languages to process: An empty text indicates that all languages are processed. Use ',' as separator for languages (e.g. 'en,de' to enhance only English and German texts).

- Default Matching Language: The language used in addition to the language detected for the analysed text to search for Entities. Typically this configuration is an empty string to search for labels without any language defined, but for some data sets (such as DBpedia.org) that add languages to any labels it might improve results to change this configuration (e.g. to 'en' in the case of DBpedia.org).

- Type Mappings: Allows additional mappings to be configured for 'dc:type' values added to created 'fise:TextAnnotation's

- Dereference Entities: Includes typical properties of linked Entities within the enhancement results. This engine currently includes only specific properties, namely the configured "Type Field", "Redirect Field" and "Label Field".

Read the technical description of this Enhancement Engine to learn about more configuration options.
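
The semantics of the "Languages to process" option listed above can be illustrated with a few lines of code. This is a sketch of the described behaviour, not the engine's own parsing code; the function names are hypothetical, and trimming whitespace and ignoring case are assumptions of the example.

```python
from typing import Set


def parse_languages(config_value: str) -> Set[str]:
    """Split a ','-separated language list; an empty text yields an empty set."""
    return {part.strip().lower() for part in config_value.split(",") if part.strip()}


def is_processed(detected_language: str, config_value: str) -> bool:
    """An empty configuration means that all languages are processed."""
    languages = parse_languages(config_value)
    return not languages or detected_language.lower() in languages


print(is_processed("de", "en,de"))  # German is listed
print(is_processed("fr", "en,de"))  # French is not listed
print(is_processed("fr", ""))       # empty text: every language is processed
```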

Note that an Enhancement Chain may also contain multiple instances of the KeywordLinkingEngine. It is also possible to mix Named Entity Linking and Keyword Linking in a single chain, e.g. to link Persons, Organizations and Places of DBpedia as well as any kind of Entities defined in your custom vocabulary. Such an Enhancement Chain could look like:

- "langid" - the required language identification (see previous section)

- "ner" - for NER support in English, Spanish and Dutch

- "celiNer" - for NER support in French and Italian

- "dbpediaLinking" - default configuration of the Named Entity Tagging Engine supporting linking with Entities defined by DBpedia.org

- "yourVocKeyword" - custom configuration of the KeywordLinkingEngine configured for your controlled vocabulary.

Configure the Enhancement Chain

The Apache Stanbol Enhancer supports multiple Enhancement Chains. These chains allow users to configure which Enhancement Engines are used, and in which order, by the Stanbol Enhancer to process content posted to http://{stanbol-instance}/enhancer/chain/{chain-name}.
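
Posting content to a chain can be sketched with the Python standard library. The URL pattern is the one given above; the function names, the "text/plain" content type and the "text/turtle" Accept header are assumptions of this example and may need to be adapted to your setup.

```python
import urllib.parse
import urllib.request


def build_enhancer_request(stanbol_instance: str, chain_name: str, text: str,
                           accept: str = "text/turtle") -> urllib.request.Request:
    """Build a POST request for http://{stanbol-instance}/enhancer/chain/{chain-name}."""
    chain = urllib.parse.quote(chain_name, safe="")
    url = f"http://{stanbol_instance}/enhancer/chain/{chain}"
    return urllib.request.Request(
        url,
        data=text.encode("utf-8"),
        method="POST",
        # Header values are assumptions; adjust them to the formats you need.
        headers={"Content-Type": "text/plain; charset=UTF-8", "Accept": accept},
    )


def enhance(stanbol_instance: str, chain_name: str, text: str) -> str:
    """Send the text to the chain and return the serialized enhancement results."""
    request = build_enhancer_request(stanbol_instance, chain_name, text)
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

Calling enhance("localhost:8080", "myChain", "Some text") requires a running Stanbol instance; the host, port and chain name here are placeholders.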

Enhancement Chains are created and configured using the Configuration Tab of the Apache Felix WebConsole - http://{host}:{port}/system/console/configMgr. Users can choose one of the following three Chain implementations: "Weighted Chain", "List Chain" or "Graph Chain". While all three can be used for the chains referenced in this usage scenario, the "Weighted Chain" is typically the easiest to use as it automatically sorts the Engines regardless of the configuration order provided by the user.

An Enhancement Chain configuration consists of three parameters:

- Name: The name of the chain as used in the RESTful interface - http://{stanbol-instance}/enhancer/chain/{chain-name}

- Engines: The name of the engine(s) used by this Chain. The order of the configuration is only used by the "List Chain". "{engine-name};optional" can be used to specify that this Chain can still be used if this engine is currently not available or fails to process a content item.

- Ranking: If there are two Enhancement Chains with the same name, then the one with the higher ranking will be executed

See also the documentation for details on enhancement chains.
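
The "Engines" and "Ranking" parameters described above can be modelled in a few lines. This is a simplified sketch for illustration and not Stanbol's configuration parser: the types EngineRef and Chain and both function names are hypothetical, and only the "{engine-name};optional" form mentioned above is handled.

```python
from typing import List, NamedTuple


class EngineRef(NamedTuple):
    name: str
    optional: bool


class Chain(NamedTuple):
    name: str
    engines: List[EngineRef]
    ranking: int


def parse_engine_entry(entry: str) -> EngineRef:
    """Parse '{engine-name}' or '{engine-name};optional'."""
    parts = [part.strip() for part in entry.split(";")]
    return EngineRef(name=parts[0], optional="optional" in parts[1:])


def select_chain(chains: List[Chain], name: str) -> Chain:
    """Of all chains registered under the same name, the highest ranking wins."""
    candidates = [chain for chain in chains if chain.name == name]
    if not candidates:
        raise KeyError(name)
    return max(candidates, key=lambda chain: chain.ranking)


engines = [parse_engine_entry(e) for e in ["langid", "ner", "celiNer;optional"]]
chains = [Chain("multilingual", engines, 0), Chain("multilingual", engines[:2], 10)]
print(select_chain(chains, "multilingual").ranking)
```

Marking "celiNer" as optional in this way keeps the chain usable when the external service behind that engine is unavailable.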

Results

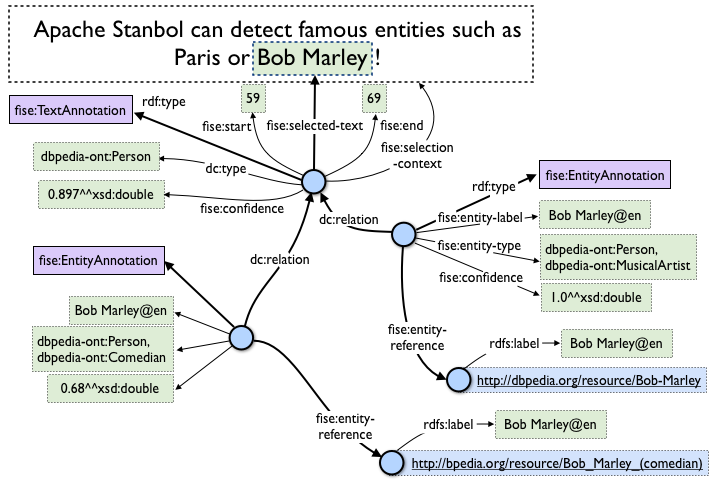

Extracted Entities will be formally described in the RDF enhancement results of the Stanbol Enhancer by:

- fise:TextAnnotation: The occurrence of the extracted entity within the Text. Also providing the general nature - value of the 'dc:type' property - of the entity. In case of Named Entity Linking TextAnnotations represent the Named Entities extracted by the used NER engine(s)

- fise:EntityAnnotation: Entities of the configured controlled vocabulary suggested for one or more 'fise:TextAnnotation's - value(s) of the 'dc:relation' property.
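
The relation between the two annotation types can be sketched by grouping suggestions per mention. The example assumes the enhancement results are already available as plain (subject, predicate, object) tuples with prefixed names; the function name and the sample identifiers are made up for illustration, and a real client would parse the RDF with an RDF library instead.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object) with prefixed names


def suggestions_per_mention(triples: Iterable[Triple]) -> Dict[str, List[str]]:
    """Map each fise:TextAnnotation to the fise:EntityAnnotations related to it."""
    triples = list(triples)
    types = defaultdict(set)
    for subject, predicate, obj in triples:
        if predicate == "rdf:type":
            types[subject].add(obj)
    grouped = defaultdict(list)
    for subject, predicate, obj in triples:
        # dc:relation points from an EntityAnnotation to its TextAnnotation(s)
        if (predicate == "dc:relation"
                and "fise:EntityAnnotation" in types[subject]
                and "fise:TextAnnotation" in types[obj]):
            grouped[obj].append(subject)
    return dict(grouped)


# Hypothetical, hand-written sample data (not actual Stanbol output).
sample = [
    ("urn:example:text-1", "rdf:type", "fise:TextAnnotation"),
    ("urn:example:entity-1", "rdf:type", "fise:EntityAnnotation"),
    ("urn:example:entity-1", "dc:relation", "urn:example:text-1"),
    ("urn:example:entity-2", "rdf:type", "fise:EntityAnnotation"),
    ("urn:example:entity-2", "dc:relation", "urn:example:text-1"),
]
print(suggestions_per_mention(sample))
```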

The figure in this section provides an overview of the knowledge structure.

Examples

This article from October 2011 describes how to deal with multilingual texts.